The Trolley Problem: Will Our Cars Grow Up to Be Heroes?

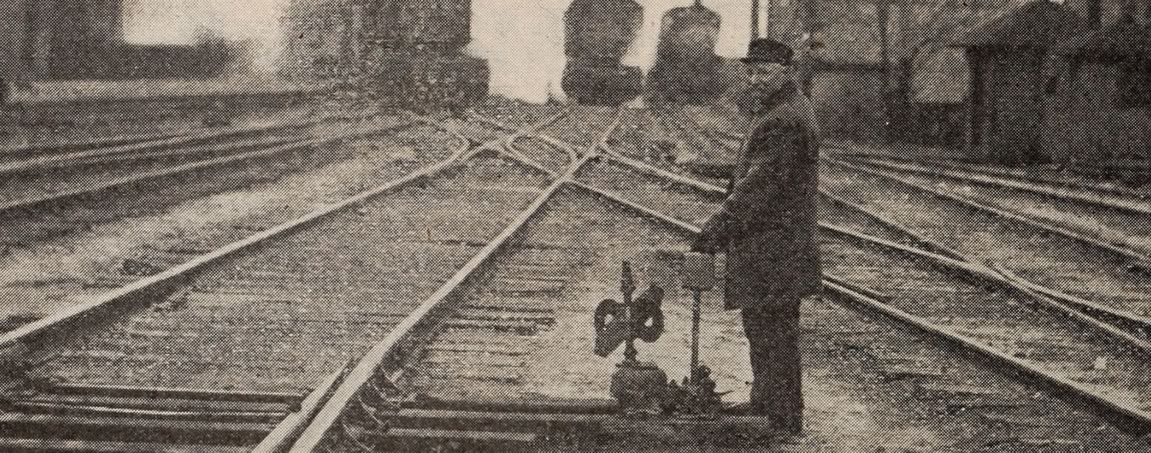

Next year marks the 50th anniversary of one of the world’s most famous ‘thought experiments’. It may lack the pop-cultural punch of ‘Schrödinger’s cat’, but it still stands out as an absolute zinger – it’s called ‘The Trolley Problem’.

Philippa Foot first sketched out the idea in 1967 as a philosophical puzzle designed to probe the soft underbelly of your ethics. Many subtle variations have sprung up since, but the core scenario goes like this:

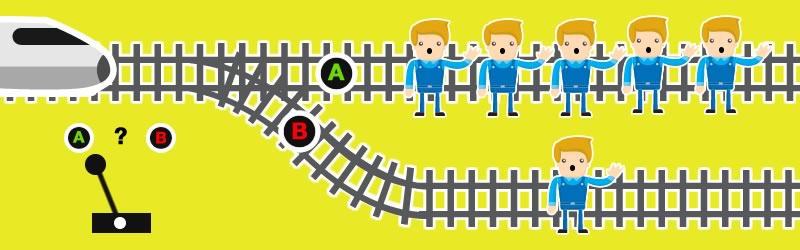

- Imagine a runaway trolley car (or train) is careering down a track towards a group of five workers. You have no way to warn them, and they will almost certainly all die unless someone intervenes.

- You have control of a switch lever that can divert the trolley into a side-track, instantly saving our five workers. However, there’s another person standing on that side-track who will now have no opportunity to escape a ‘hurtling trolley death’.

What Would You Do?

- Do nothing, witness five deaths, but bear no direct responsibility for the loss of life.

- Or take action, save five lives, but personally bring about the death of another human.

It’s a tough problem, and there’s no easy or unequivocally right answer. My daughter wanted to shout at them all to move, but that’s not an option.

For what it’s worth, most people choose to sacrifice the single person. The greater good.

However, the most common variation introduces a new person and is rather uncharitably called the ‘Fat Man’ scenario. In this story, you can choose to save the workers by – as nasty as it might sound – pushing a generously proportioned man off a footbridge into the path of the oncoming trolley.

Unsurprisingly, most people are appalled by this idea. Regardless of the upside, surely killing an innocent man by pushing him in front of a train is the act of a monster?!

Of course, in a strictly mathematical sense, the only difference between the two stories above is the method by which you choose to dispatch the unfortunate person. Levers are certainly much cleaner than a well-timed elbow. Does the method matter, or is it all about the outcome?

If you’re having trouble getting your head around the idea, Harry Shearer – he of The Simpsons and Spinal Tap fame – made a great video for BBC Radio 4 in 2014 that explains the problem brilliantly.

Lucky That Thought Experiments Are Harmless, Right?

Of course, this is all just theory. Brain games. A mischievous pub conversation or, at worst, a chance for philosophy nerds to show off at dinner parties.

Not quite.

[Image: Google self-driving car.]

As we’re all aware, most of the world’s major car companies are investing in driverless technology. We know these systems are already safer than the average human driver. Unlike us, robot cars are built to scan surrounding traffic thousands of times every second and instantly adjust to changes.

But they can’t predict everything. Tires fail catastrophically. Trees fall unpredictably. Other drivers suffer seizures. Very occasionally, driverless cars are going to find themselves in their own ‘Trolley Problem’ scenarios. And – presumably – software developers are currently writing the decision algorithms to handle them. Set a ‘0’ and we go straight – set a ‘1’ and we turn.
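To make the ‘0 or 1’ idea concrete, here is a deliberately naive sketch of a pure ‘save the most humans’ rule in Python. Everything in it is hypothetical: the `Path` class, the `choose_path` function and the assumption that the car can count the people on each path perfectly are inventions for illustration only, not how Google, Volvo, Ford or anyone else actually programs their cars.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """Hypothetical: one possible trajectory and the people the car predicts it would hit."""
    name: str
    people_at_risk: int


def choose_path(straight: Path, swerve: Path) -> int:
    """Hypothetical 'save the most humans' rule: return 0 to go straight, 1 to turn.

    The car only turns if swerving puts strictly fewer people at risk.
    On a tie it stays on its current course, mirroring the 'do nothing' option.
    """
    return 1 if swerve.people_at_risk < straight.people_at_risk else 0


# The classic scenario: five workers ahead, one person on the side-track.
decision = choose_path(Path("straight", 5), Path("side-track", 1))
print(decision)  # 1, so the car turns
```

Notice how much is hidden inside that single comparison. It ignores who the people are, how confident the car is in its own predictions, and whether the passenger counts at all. Each of the questions that follow is really an argument about what belongs in that one line.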

It raises some heavy moral questions.

- If you paid for a car, would you expect it to prioritize your safety over others?

- Is it a pure numbers game of ‘save the most humans’?

- Should the age of the people make a difference? Perhaps babies get preference?

- Should the car be taking into account the estimated body weights of potential accident victims?

- Should prestige cars make different decisions to economy cars?

- Would Donald Trump’s car accelerate? (jk)

Are software engineers even equipped to take on these kinds of tricky ethical questions? It’s certainly not part of most computer science courses. Perhaps Google, Volvo, and Ford need to be hiring more philosophers and ethicists.

With only a few hundred self-driving cars currently on the planet, these questions still fall close to the realm of the ‘thought experiment’.

But 10 years from now, there could be millions of self-driving cars making life-or-death decisions every day. Things are about to get ‘real’ – fast.

May you live in interesting times.